Langston,

Cognitive Psychology, Notes 5 -- Imagery

Note: For a lot of this I relied on a very nice essay by Bruce (1996). Her edited volume Unsolved Mysteries of the Mind doesn't really play up the mystery part as much as you'd expect, but it presents a nice discussion of some of the debates in Cognitive Psychology.

I. Goals.

A. Where we are/themes.

B. Measuring imagery.

C. Images and memory.

D. Using images.

E. Structure of images.

F. Other kinds of images.

G. Reality monitoring.

II. Where we are/themes. It's hard to fit imagery into the course as a separate unit since it goes with so many other areas. I'm leaving it separate because going over how imagery interacts with the other systems will serve as a good review. Basically, our questions are:

- What is a mental image?

- What do mental images do for you?

Here's a little exercise (from Bruce, 1996). Imagine a dinner plate. There's some spaghetti around the top rim. Just below that are two fried eggs side by side. In the middle is a carrot, pointing down. Below the carrot is a banana. How many people have a clear mental image? What do you see there?

Do people use images? This is a perfect cognitive psychology question. It's the ultimate kind of mental event. Note that demonstrating that people do use images puts all of our methodology to the test.

On the surface, it's obvious that imagery exists. Here's a simple example from Descartes. Imagine a hexagon and a pentagon. Can you mentally see the difference between the two? Now, imagine a 999-sided polygon and a 1000-sided polygon. Can you see that? You can conceive of such things, but you can't image them, suggesting that imagery is something different from language and other thought processes.

Some big debates:

A. Can you have imageless thought? Is it possible to have a thought not accompanied by a visual, verbal, or tactile image? Does it matter?

B. What is a visual image? Is it a picture in the head? Activation of neural circuits used for vision? Something else?

C. How do you know what's real? We'll finally tackle some issues raised in the first lecture by asking how you know you're remembering an image and not something that actually happened.


III. Measuring imagery. Most models of intelligence acknowledge that there are multiple intelligences. You can be verbally strong, but spatially weak, and vice versa. In other words, knowing a person's verbal ability doesn't necessarily tell you anything about their spatial ability.

How can we assess your imagery? There are lots of tasks. Here are two sampled from a book on intelligence by Guilford (1967).

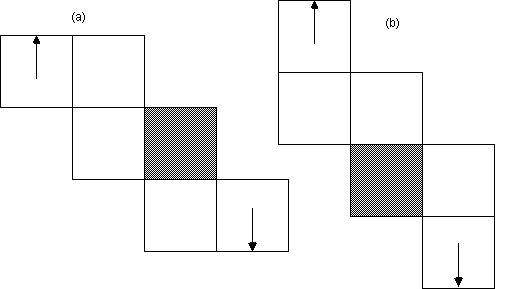

A. Cube folding. I have some expanded cubes with arrows. Mentally fold the cubes and tell me if the arrows will touch.

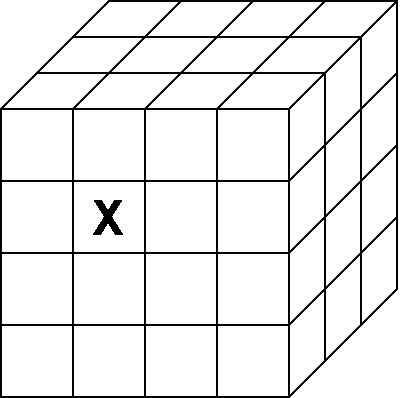

B. Cube task. I show you the initial picture of an ‘X’ on a cube. Then, I take away the cube and tell you how the X moves. You tell me where it ended up.

Demonstration: There are two:

1. Cube folding. Will the arrows touch? The first is “yes,” the second is “no.” I have some you can fold if you want to check.

2. Cube task. Here's a cube. Here are the moves: One down, one back, one down, one left, one back, one right, one up, one forward, one forward, one up. Where is it?
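To check your answer to the cube task, here is a minimal sketch that tracks the X as a running offset from its starting spot. The coordinate frame (which axis counts as up, left, or forward) and the names `MOVES` and `track_position` are my own assumptions for illustration; the task itself only names the moves.

```python
# Each move shifts the X one step along one axis (assumed coordinate frame).
MOVES = {
    "up": (0, 1, 0),
    "down": (0, -1, 0),
    "left": (-1, 0, 0),
    "right": (1, 0, 0),
    "forward": (0, 0, 1),
    "back": (0, 0, -1),
}


def track_position(moves, start=(0, 0, 0)):
    """Return the final (x, y, z) offset after applying the moves in order."""
    x, y, z = start
    for move in moves:
        dx, dy, dz = MOVES[move]
        x, y, z = x + dx, y + dy, z + dz
    return (x, y, z)


demo = ["down", "back", "down", "left", "back",
        "right", "up", "forward", "forward", "up"]
final = track_position(demo)
print(final)  # (0, 0, 0): every move is cancelled by its opposite
```

The computer only has to store three numbers and update them. You have to hold the whole cube in mind while you update, which is the storage plus processing load the task is meant to measure.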

What can we learn from these types of tasks? Just like digit span can tell us about short-term memory capacity, these tasks can tell us about your spatial ability. There seems to be a lot more variability in spatial ability than in verbal ability (in college populations). Why? Think about the skills emphasized and the tests used for admissions.

Note the parallel to working memory. All of these tasks require storage plus processing.


IV. Images and memory.

A. Learning pictures.

1. Shepard (1967): People saw a long list of pictures or words (612). After two hours, they took a recognition test (two-alternative forced choice). People were nearly 100% accurate for pictures, about 88% for words. After a week, people were about 88% for both. So, picture memory is better than word memory, but not after a long delay.

2. Standing (1973): Learn 1000 words, 1000 simple pictures, and 1000 bizarre pictures. Recognition was tested two days later. People learned 615 words, 770 pictures, and 880 bizarre pictures. So, people are really good with pictures, and better than with words. This is part of the evidence that will lead Paivio to propose a dual code (one verbal, one visual). We'll see that later.

B. Using imagery to learn other stuff.

1. The concrete-abstract dimension. Words vary on a lot of characteristics. One is how concrete the word is (“computer” vs. “thought”). What effect does concreteness have on memory? Concrete words are easier to recall than abstract words. I have a demonstration of this.

Demonstration: Here's a list of pairs. Some are pairs of concrete words, some are pairs of abstract words. Learn them, then do cued recall. You should remember more concrete words.

Why? Paivio thinks it's a dual code thing. You have a picture code that's separate in memory from your word codes. When you get a concrete word, you can also get an image in the picture code, and that gives you two chances to recall. If you get an abstract word, you only get one chance to recall (verbal code), and that makes it more likely that you will forget. This dual code also explains why picture memory is better than word memory. Two codes = twice the chances to find what you're looking for.
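To make the “two chances” idea concrete, here is a small worked sketch. It assumes each code is retrieved independently with the same probability, which is my simplifying assumption and not something Paivio's theory specifies. The function name `recall_probability` and the value 0.5 are made up for illustration.

```python
def recall_probability(p_single, n_codes):
    """Chance that at least one of n independent codes is retrieved."""
    return 1 - (1 - p_single) ** n_codes


p = 0.5  # hypothetical per-code retrieval probability, not a measured value
abstract = recall_probability(p, 1)  # verbal code only
concrete = recall_probability(p, 2)  # verbal code plus image code
print(abstract, concrete)  # 0.5 0.75
```

Under this assumption a second code always helps, but it doesn't literally double recall: you go from 50% to 75% here, because sometimes both codes would have succeeded anyway.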

One source of evidence for dual codes is the symbolic distance effect. The basic effect is that it is harder to make decisions about the relative orderings of things the closer they are along a dimension. For example, think of a flea, a fly, a rabbit, a German Shepherd, and a horse. People's response times to decide which is larger are faster for comparisons like horse and flea than for comparisons like fly and flea. The closer the objects are, the harder it is to decide. This is best explained as an image thing. People access a mental representation that is an analog of the real thing, compare it to another image, and “see” the result.

One source of evidence for Paivio is that picture symbolic distance tasks are faster than verbal symbolic distance tasks. A picture can access the correct mental system directly, while the words in the verbal task have to be recoded before a comparison can be made, making pictures faster.

Note that, in one sense, a symbolic distance effect is kind of nuts. If I describe an ordering to you that includes the sentence “the fly is larger than the flea” and then ask you to verify the statement “the fly is larger than the flea,” it takes you longer than verifying a statement about the fly and a horse, even though you were given that exact sentence. That would lead some to say that verbal and image systems are clearly separate.

C. Putting imagery to work. Mnemonic devices (memory tricks) usually take advantage of imagery. A popular one is peg-words. You first memorize a list that goes from one to ten (one is a horse, two is a picture, ...). Then, when you have a new list to learn (like your shopping list), you imagine the items on the list interacting with your peg words. So, if you need to buy a toothbrush, you might imagine a horse brushing his teeth. When you get to the store, you start with “one is a horse,” and that triggers the memory of the image, and you buy a toothbrush.
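If it helps to see the bookkeeping, the peg-word method can be sketched as a lookup from numbers to (peg, item) pairs. This is only an illustration of the steps above, not a model of memory. The function names `encode` and `recall` are hypothetical, and “milk” is a made-up second shopping item.

```python
def encode(pegs, items):
    """Pair each list item with the peg word for its position (1-based)."""
    if len(items) > len(pegs):
        raise ValueError("more items than peg words")
    return {n: (pegs[n - 1], item) for n, item in enumerate(items, start=1)}


def recall(pairs, n):
    """Cue with a number: the peg brings back the image, the image the item."""
    peg, item = pairs[n]
    return item


pegs = ["horse", "picture"]        # the first two pegs from the example
shopping = ["toothbrush", "milk"]  # "milk" is a made-up second item
pairs = encode(pegs, shopping)
print(pairs[1])          # ('horse', 'toothbrush')
print(recall(pairs, 2))  # milk
```

The pegs never change, so the only new thing to learn for each list is the interacting image that links a peg to an item.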

Demonstration: I'll hand out some peg-word systems. Practice until you've completely learned your system. Here are the three systems:

| System one | System two | System three |
| --- | --- | --- |
| One is a bun | One is a door | One is truth |
| Two is a shoe | Two is heaven | Two is data |
| Three is a tree | Three is a gate | Three is a message |
| Four is a door | Four is sticks | Four is love |
| Five is a hive | Five is a hen | Five is knowledge |
| Six is sticks | Six is a bun | Six is fun |
| Seven is heaven | Seven is a tree | Seven is time |
| Eight is a gate | Eight is a hive | Eight is have |
| Nine is a line | Nine is a shoe | Nine is beauty |
| Ten is a hen | Ten is a line | Ten is focus |

Then, memorize the shopping list. Then recall. Compare. System one should be best, followed by two, followed by three. Why? System one has rhyming plus imagery (the words are all concrete). System two has imagery, but no rhyming. System three has neither. By the way, system one is the real peg-word system if you want to use it.


V. Using images. Now we come to the real problem. I can't see you having an image. So, the data I'm about to present are based on a great deal of trust that participants are really doing it. The basic goal is to look at the relationship between pictures and images.

A. Mental rotation. If you physically rotate something on a table, the farther you go, the longer it takes. Do images work this way?

CogLab: Mental rotation exercise.

It should work. Shepard has found this effect in a number of studies. The farther you rotate, the longer it takes, and the relationship is pretty linear.
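A “pretty linear” relationship means response time can be approximated as a fixed overhead plus a constant amount of time per degree of rotation. Here is a minimal sketch of that idea. The function name `predicted_rt` and both parameter values are illustrative placeholders I picked to make the arithmetic easy; they are not results from Shepard's studies or from the CogLab.

```python
def predicted_rt(angle_deg, base_s=1.0, rate_deg_per_s=60.0):
    """Linear model: fixed overhead plus time to rotate through the angle.

    base_s and rate_deg_per_s are placeholder values, not measured data.
    """
    return base_s + angle_deg / rate_deg_per_s


for angle in (0, 60, 120, 180):
    print(angle, predicted_rt(angle))
```

With these placeholder values, every extra 60 degrees adds one second. When you look at your own CogLab data, the thing to check is whether equal steps in angle add roughly equal amounts of time.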

B. Scanning. If I ask you to visually scan from one spot to another, the farther you go, the longer it takes. Kosslyn had people memorize a map of an island and then scan across their image of it, and he found a linear relationship between distance and time.

Another experiment manipulated instruction set (Kosslyn, 1976). Some people were instructed to use images to help answer questions, others had no instructions. Imagery people were faster to answer questions if the size of the item probed was larger (does a bee have wings?). Non-imagery people were faster on the basis of association strength (does a bee have a stinger?). So, focusing on images seems to access a different kind of information.


VI. Structure of images. What is an image? Phenomenologically, what does it feel like? What might it be? Again, since we can't see it, this is entirely speculative. But, here are two options:

A. Images are propositions. A proposition is an idea unit. It's basically verbal in nature, but it isn't words or linguistic. It's a kind of language of thought. So, images feel like pictures, but they're really coded in a different kind of language. The feeling isn't real.

B. Images are pictures. Sort of like what we've been building so far. On one level, this is absurd. A tomato is red. A picture of a tomato is red. Is an image of a tomato red? No. Is an image of an elephant physically larger than an image of a rabbit? Some of the scanning stuff certainly suggests this (“think of a rabbit by a fly, think of his eyelash” is easier than “think of a rabbit by an elephant, think of his eyelash”).

C. How can we tell if an image is like a picture? Well, we could look for cases where pictures and images are different. Let's try some of that.

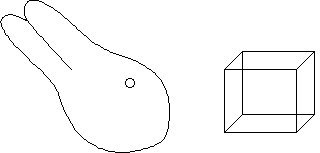

1. It's hard to reverse an image, but it's easy to reverse a picture. Look at the drawings: The one on the left could be a rabbit or a duck. You should be able to see it both ways. The cube on the right can flip which face is the front. Again, you should be able to see it both ways. Now, get an image, hide the paper, and try to flip it. It should be hard, and you might not be able to do it. So, images and pictures do differ.

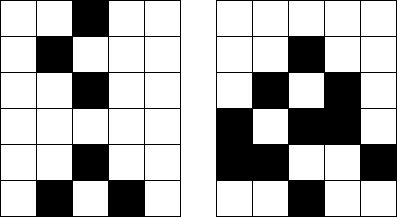

2. It's hard to decompose an image. Try the demonstration.

Demonstration: Are images like a picture in the head? If so, then activities that a picture can support should also be supported by an image. Let's see (based on Reed and Johnsen, 1975). I'm going to show you a figure. Memorize it and get a clear mental image. When you're ready, raise your hand. Now, I'll cover it up and ask you if certain shapes are part of the figure. Write down the letters of all of the shapes that are in the figure. (Show that people are usually bad at this.)

Let's try number two. Bad again.

Most people make a lot of mistakes. Again, images and pictures are different.

However, Finke, Pinker, and Farah (1989) argue that image reinterpretation is possible; it just depends on the kind of processing. Overcoming low-level grouping information is hard, but higher-level organization should be possible. An example: Think of a lower-case k. Now, imagine a circle around the k, just not touching it. Now, cut off the lower half of the k. What do you have? A lot of people can do this sort of thing. The rabbit and reinterpretation demonstrations are a different sort of processing.

D. Maybe images use perceptual hardware (it's like seeing, only fainter). So, an image is basically utilizing the same hardware that you use for perception. This makes it qualitatively and phenomenologically different from processing verbal information, but still ties it to a realistic neurological foundation.

1. We can start by looking at interference. This is popular with cognitive psychologists. If images use visual hardware and words use verbal hardware, pictures and words shouldn't interfere. If they all use the same hardware, pictures and words should interfere with each other. Try the demonstration.

Demonstration: Are images really verbally coded (like propositions)? We should get different patterns of interference if images and verbal codes are different. Let's try (this is based on Brooks, 1968). I have some letters. The task is to go from the first corner around the letter clockwise. At each corner, tell me “yes” if it's an outside corner and “no” if it's an inside corner. You will respond based on a mental image.
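To see what the corner task asks for, here is a sketch that gives the answers for one block letter by walking its outline. The block letter L and its coordinates are a hypothetical stimulus I made up (the demonstration letters aren't listed here), and the name `classify_corners` is mine. It assumes the corners are listed clockwise with the y axis pointing up, so a right turn is an outside corner and a left turn is an inside corner.

```python
def classify_corners(vertices):
    """Label each corner of a clockwise outline "yes" (outside) or "no" (inside).

    Assumes vertices are listed clockwise with the y axis pointing up.
    """
    n = len(vertices)
    answers = []
    for i in range(n):
        px, py = vertices[i - 1]        # previous corner
        cx, cy = vertices[i]            # current corner
        nx, ny = vertices[(i + 1) % n]  # next corner
        # Cross product of the incoming and outgoing edges:
        # negative means a right turn, positive means a left turn.
        cross = (cx - px) * (ny - cy) - (cy - py) * (nx - cx)
        answers.append("yes" if cross < 0 else "no")
    return answers


# Hypothetical block letter L, starting at the bottom-left corner.
block_l = [(0, 0), (0, 3), (1, 3), (1, 1), (2, 1), (2, 0)]
answers = classify_corners(block_l)
print(answers)  # ['yes', 'yes', 'yes', 'no', 'yes', 'yes']
```

The program has the outline stored as numbers. You have to read the same turns off a mental image, which is why the way you report your answers can get in the way.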

There are two response modalities, visual and verbal. If I assign you to verbal, you whisper your “yes” and “no” answers. If you're visual, you look at the ‘Y’ or ‘N’ on the correct row depending on what corner you're on. If images are visual, visual responders should be slower (more interference). To let us know, I'll split the class in half. Clap when you're done. The verbal half of the room should clap first.

Now, let's look at verbal codes. I have some sentences. For each word, respond “yes” if it's a noun or pronoun, “no” otherwise. We'll have the two modalities again. In this case, the verbal modality should show more interference.

We should get more interference between pictures and pictures than between pictures and words, and vice versa. So, they seem to use different hardware. More importantly, it's a case where images and perceptual mechanisms seem to share resources.

2. Finke and Kosslyn (1980) measured acuity in visual and imagined fields and found the same elliptical visual fields in both domains. In both cases, acuity was better below the horizontal axis than above. The findings were not consistent with people's beliefs about their imaginal visual fields, suggesting that the results weren't due to demand characteristics. Spatial frequency resolution also followed this pattern (Finke & Kurtzman, 1981). These results suggest a similarity between visual perception and imagery.

3. Imagery doesn't seem to produce after-effects like vision does. For example, staring at red bars and then looking at a white background will cause you to perceive faint green bars. Imagining red bars will produce no such after-effect (maybe?).

4. Imagery also doesn't seem to lead to repetition priming effects. For example, imagining words in lower case doesn't seem to improve perception of those words. This is a relatively new area of research, so maybe something will emerge here.

5. Imagery supports high-level redescription, but not low-level grouping (see above). Vision supports both.

E. The conclusion: It will be hard for us to tell what images are using purely cognitive methods.

One approach to solving the imagery debate (the “what are images” question) comes from neuropsychology. You can get a look at a person's brain as they form a mental image vs. as they do a verbal task. Are the same parts of the brain involved in the two tasks? Do images actually use visual cortex like perception? It's sort of a mix between cognitive approaches and neurological approaches.

Another possible resolution may come from additional interference experiments. Basically, if you tie up the parts of the perceptual system that perceive a particular kind of stimulus, that should hurt imagery for that kind of stimulus as well. If you get a consistent pattern of this kind of result, it would suggest a relationship between imaginal and perceptual systems.


VII. Other kinds of images. I've been acting like visual images are all there are. Obviously, that's wrong. Can you imagine the smell of leaves burning? Can you imagine the taste of a steak? Do you hear an inner voice? (Uh-oh.)

Just a taste of this. Smith, Reisberg, and Wilson (1992) had people reinterpret strings like “NE1 4 10S” as “anyone for tennis,” but silently. People could interpret 73% of the strings in a quiet room. With auditory distraction, they could do 40%. With articulatory suppression, 21%. In other words, the more their vocal hardware was engaged, the worse their inner voice imagery got.

This area is rich in WRR opportunities.


VIII. Reality monitoring. Have you ever had a dream that you later confused with a real experience? (Uh-oh.) Do you sometimes experience déjà vu? Have you ever remembered something that didn't really happen? These are all problems with reality monitoring. How do you distinguish imagined events from real ones, especially if they use the same neural hardware, as we've been discussing above?

The basic answer is a process of summing up the perceptual information and the information about cognitive activity associated with the memory, and comparing the two. A memory with a lot of cognitive and little perceptual information is probably imagined. A memory with a lot of perceptual and some cognitive information is probably real.
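Stated as a decision rule, the comparison looks something like the sketch below. The function name `judge_source`, the 0-to-1 scores, and the tie-breaking choice are all hypothetical. How the two kinds of information would actually be measured or weighed is not something I'm claiming to know.

```python
def judge_source(perceptual, cognitive):
    """Guess a memory's source by comparing two kinds of evidence.

    perceptual: how much sensory detail comes back with the memory.
    cognitive:  how much record there is of the mental work that produced it.
    Ties go to "imagined", which is an arbitrary choice for this sketch.
    """
    return "real" if perceptual > cognitive else "imagined"


print(judge_source(perceptual=0.8, cognitive=0.3))  # real
print(judge_source(perceptual=0.2, cognitive=0.7))  # imagined
```

Anything that pushes the two scores closer together makes the judgment less reliable, because the rule has less of a difference to work with.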

Test? Johnson, Foley, and Leach (1988) had people imagine words being spoken by someone else (whose voice they were familiar with) and then had that person read some words out loud. This caused more confusion than imagining words in their own voice or some other person's voice. In other words, changing the balance between perceptual information and internal information made discriminating between real and imagined harder.

Finke, Johnson, and Shyi (1988) showed that the less attention you pay when you're creating images (or the less work you do), the harder it is to separate imagination from reality. People had to complete shapes like the following:

When the shapes were letters and numbers, it was hard to tell which had been seen whole and which were imagined whole. When the shapes were novel (taking more effort to complete) people were pretty good at the task.

Brain-wise, two parts are implicated in reality monitoring. People with frontal lobe damage have trouble with confabulation: they produce “memories” that are false. It is difficult or impossible to persuade them that those events did not occur. People who have their temporal lobes stimulated tend to experience déjà vu. It appears to arise as a result of reality monitoring, but the exact mechanism is unclear.

That should just about clear everything up on the déjà vu front (he says, tongue-in-cheek).

(This is a great section for a WRR. Some WRRs I've had for this topic: McNally, R.J., & Kohlbeck, P.A. (19 ). Reality monitoring in obsessive compulsive disorder. Behaviour Research and Therapy, 31, 249-253; Anderson, R. (1984). Did I do it or did I only imagine doing it?)


Cognitive Psychology Notes 5

Will Langston
