Langston, Psychology of Language, Notes 6 -- The Lexicon

I. Goals:

A. Words.

B. Meaning.

C. Routes to access/Influences on access.

D. Lexical organization.

II. Words.

A. What is a word? Pinker proposes two distinct meanings:

1. A syntactic atom. A word is a unit that can't be divided further by the grammar rules that make sentences. The word itself can be a product of a morphological grammar, but the process that produced the word left a final product that is a single unit as far as syntax is concerned.

Some examples of words by this meaning: electric (root), crunch (root), shoes (inflected by the morpheme -s to mean "plural of shoe"), crunchable (derived from crunch by adding the morpheme -able to mean "capable of being crunched"), toothbrush (compound), and Yugoslavia report (compound with a space).

2. A rote-memorized chunk of "linguistic stuff" that is paired with an arbitrary meaning. These elements are called listemes, and they can be any length; the criterion is that a listeme is an element whose meaning and form have to be associated by memorization. The listemes make up the mental lexicon, which we'll discuss later.

By the second definition, how many words do people know? By one estimate in Pinker, a high school graduate knows around 45,000 words. Throw in proper names, foreign words, etc., and Pinker guesses around 60,000. This presents two huge problems:

a. How do you manage to learn all of that? To reach 60,000 words, a high school graduate would have to have learned 10 new words a day, every day, since their first birthday. A preliterate child has to pick up a new word every two hours, every day. This is another of Pinker's arguments for language being special and possibly innate. What else can preliterate kids learn at a rate of one item every two hours, and then remember and use mostly correctly for the rest of their lives? Remember, whatever this other thing is, it has to be totally arbitrary (imagine learning a new word in a foreign language every two hours). In the next section, we'll see some of the argument for how a little innate predisposition can really help this process.

b. Once you have 60,000 words in your head, how do you access the correct meaning for the word you're currently supposed to recognize in the incredibly short time you have to do it? We'll discuss lexical access in a bit, but it's a challenge.
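The arithmetic behind the learning-rate claim can be checked in a few lines. This is a minimal sketch using the figures from the notes (60,000 words, 10 words a day, counting from the first birthday); the 365.25-day year and the use of 24 clock hours rather than waking hours are assumptions added here.

```python
# Figures from the notes (Pinker's estimates).
TARGET_VOCABULARY = 60_000
WORDS_PER_DAY = 10
START_AGE_YEARS = 1      # learning counted from the first birthday

# Assumptions added for this sketch.
DAYS_PER_YEAR = 365.25
HOURS_PER_DAY = 24       # clock hours, not waking hours

days_needed = TARGET_VOCABULARY / WORDS_PER_DAY
years_needed = days_needed / DAYS_PER_YEAR
age_at_target = START_AGE_YEARS + years_needed
hours_per_word = HOURS_PER_DAY / WORDS_PER_DAY

print(f"{days_needed:.0f} days, about {years_needed:.1f} years")
print(f"60,000 words reached at about age {age_at_target:.1f}")
print(f"one new word every {hours_per_word:.1f} clock hours")
```

The result (roughly age 17) lines up with high school graduation. Counting only waking hours would shrink the interval between new words below the 2.4 clock hours computed here.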

B. How are words formed? One approach is to make words out of smaller elements in the same way that sentences are made of words.

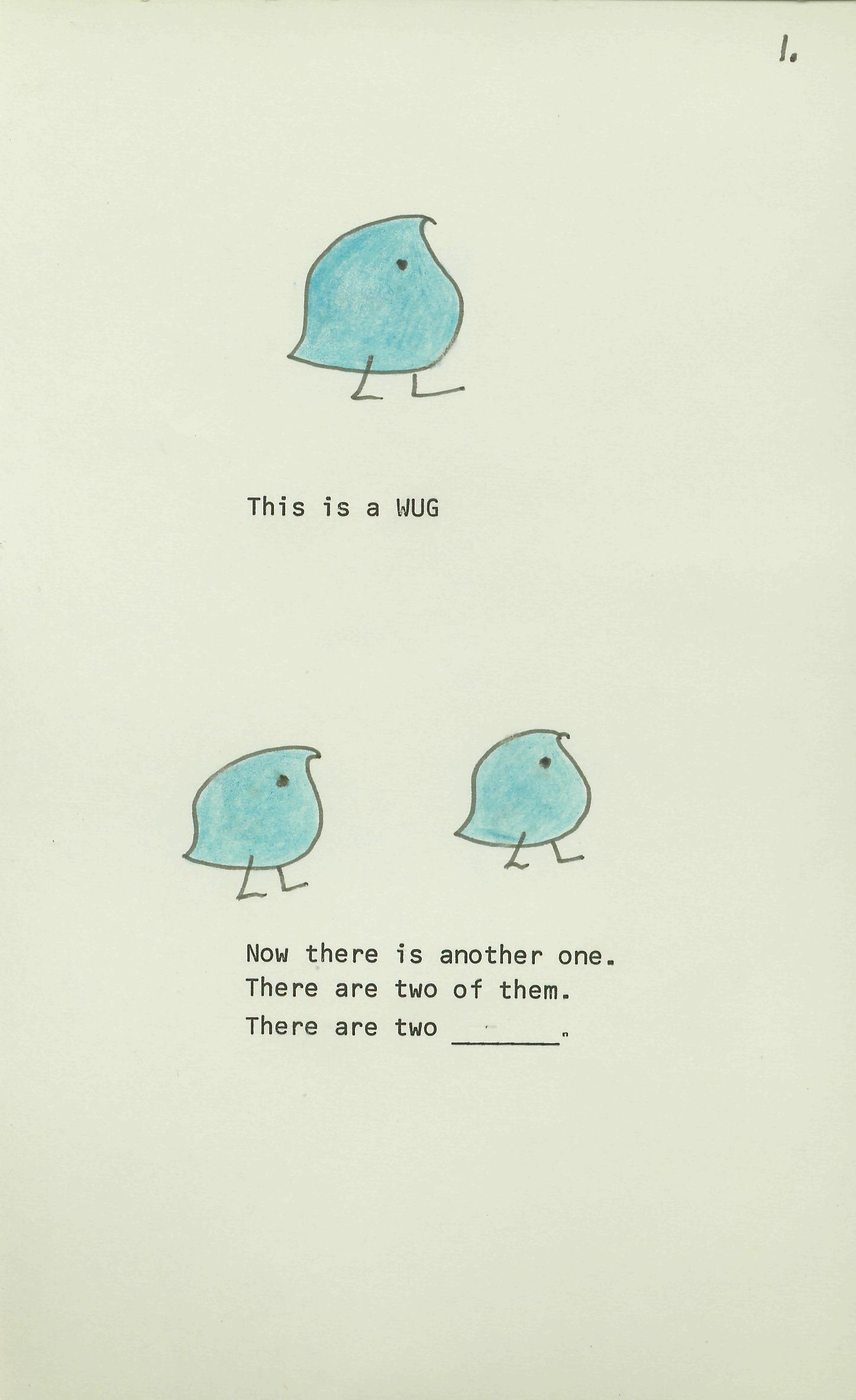

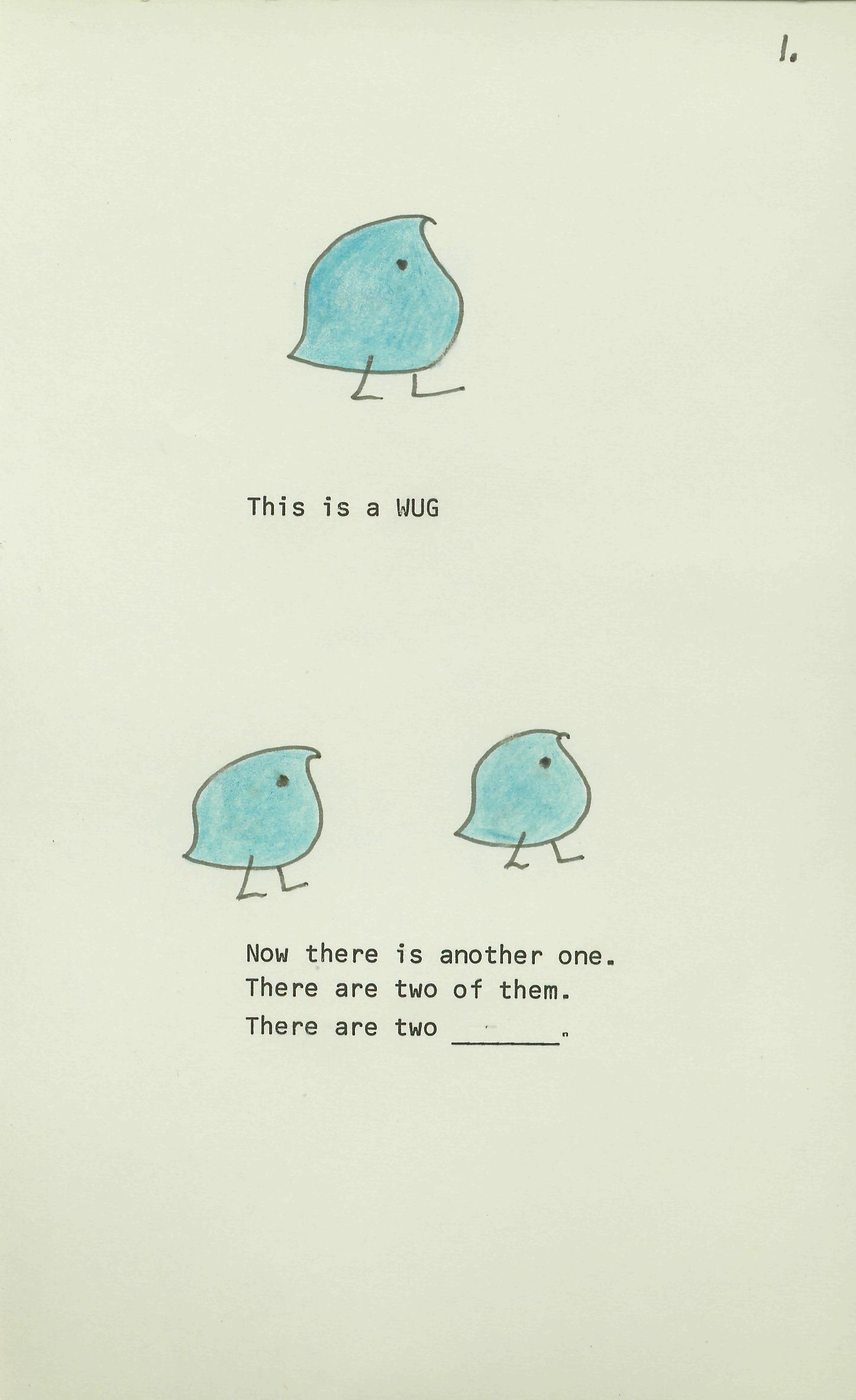

1. The wug test (one image from the CHILDES website is included with these notes; the CHILDES site also has pictures of wug aficionados) shows that kids seem to do this. Show a kid a picture and say "This is a wug. Now there is another one. There are two ____" and await a response. Kids say wugs. They must be using a rule (add -s for plural), since they've never seen a wug before and have never had anyone model or reinforce the plural of wug.

2. If word formation is rule based, how does it work? We'll need some elements and some rules (a grammar):

a. First rule: N -> Nstem + Ninflection (a noun is a noun stem plus a noun inflection). Nstems are things like dog and shoe, and inflections are things like -s (plural).

b. Also: Nstem -> Nstem + Nstem to produce words like Yugoslavia report and toothbrush. Because this rule's output is itself an Nstem, it can feed back into the rule, giving words like toothbrush-holder fastener box.

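c. Also: Nstem -> Nroot + Nrootaffix to produce stems from roots. Note that some of the morphemes are going to apply only to roots, and others to stems. This will explain why some words (Darwinianisms) sound more OK than others (Darwinismian): if -ian attaches only to roots while -ism attaches to stems, then Darwin + -ian + -ism is fine, but Darwin + -ism + -ian is not, because adding -ism has already turned the root into a stem.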

d. Plus a lot more, which you can find in Pinker. The basic idea is that words are just like the rest of language in that it's possible to work out a grammar to describe their formation.
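The rules above can be sketched as a tiny word-building grammar in Python. This is a toy, not Pinker's formalism: the class and function names are hypothetical, a bare root is allowed to act as a stem, and assigning -ian to the root affixes and -ism to the stem affixes is an assumption chosen to reproduce the Darwinianisms/Darwinismian contrast.

```python
from dataclasses import dataclass

# Assumed affix classes, for illustration only.
ROOT_AFFIXES = {"-ian"}
STEM_AFFIXES = {"-ism"}
INFLECTIONS = {"-s"}


@dataclass(frozen=True)
class Form:
    level: str                  # "root", "stem", or "noun" (fully inflected)
    morphemes: tuple[str, ...]  # morphemes in order


def root(text: str) -> Form:
    return Form("root", (text,))


def as_stem(form: Form) -> Form:
    # Assumption: a bare root can also serve as a stem.
    if form.level == "noun":
        raise ValueError("an inflected noun cannot be used as a stem")
    return Form("stem", form.morphemes)


def add_root_affix(base: Form, affix: str) -> Form:
    # Nstem -> Nroot + Nrootaffix: only a bare root can take a root affix.
    if base.level != "root" or affix not in ROOT_AFFIXES:
        raise ValueError(f"cannot attach {affix} to {'+'.join(base.morphemes)}")
    return Form("stem", base.morphemes + (affix,))


def add_stem_affix(base: Form, affix: str) -> Form:
    # Stem affixes attach to any stem (a root counts as a stem here).
    if affix not in STEM_AFFIXES:
        raise ValueError(f"{affix} is not a stem affix")
    return Form("stem", as_stem(base).morphemes + (affix,))


def compound(left: Form, right: Form) -> Form:
    # Nstem -> Nstem + Nstem: the output is a stem, so it can feed back in.
    return Form("stem", as_stem(left).morphemes + as_stem(right).morphemes)


def inflect(base: Form, inflection: str) -> Form:
    # N -> Nstem + Ninflection
    if inflection not in INFLECTIONS:
        raise ValueError(f"{inflection} is not an inflection")
    return Form("noun", as_stem(base).morphemes + (inflection,))


darwin = root("Darwin")
darwinianisms = inflect(add_stem_affix(add_root_affix(darwin, "-ian"), "-ism"), "-s")
print(darwinianisms.morphemes)  # ('Darwin', '-ian', '-ism', '-s')

try:
    add_root_affix(add_stem_affix(darwin, "-ism"), "-ian")  # *Darwinismian
except ValueError as err:
    print("blocked:", err)  # blocked: cannot attach -ian to Darwin+-ism

toothbrush = compound(root("tooth"), root("brush"))
fastener_box = compound(compound(compound(toothbrush, root("holder")), root("fastener")), root("box"))
print(fastener_box.morphemes)

wugs = inflect(root("wug"), "-s")  # the rule works on a stem nobody has seen before
print(wugs.morphemes)  # ('wug', '-s')
```

Because add_root_affix checks for a bare root, the Darwinismian order is rejected, while the recursive compound rule keeps building longer stems. The same inflection rule applies to a novel stem like wug exactly as it would to shoe, which is the point of the wug test.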

3. As Pinker notes, English is kind of weak in inflectional morphology (two noun forms, e.g., duck and ducks, and four verb forms, e.g., quack, quacks, quacked, and quacking). Most languages are a lot richer in inflection than that. For derivational morphology, however, English is pretty respectable. You can fool around with combining some of these suffixes with one another to see this in action:

-able, -age, -al, -an, -ance, -ant, -ary, -ate, -ed, -en, -er, -ful, -hood, -ic, -ify, -ion, -ish, -ism, -ist, -ity, -ive, -ize, -ly, -ment, -ness, -ory, -ous, -y

Take one of the longer real words in English: antidisestablishmentarianism. Try to derive its meaning from the morphemes that have been piled on. As Pinker notes, it doesn't make a lot of sense to claim to have the longest word in English, because you can always put on another morpheme. Take floccinaucinihilipilification (the categorizing of something as worthless or trivial, and one letter longer than antidisestablishmentarianism). Floccinaucinihilipilificationalizational is "pertaining to the act of causing something to pertain to the categorizing of something as worthless or trivial." And one could go on.
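The letter counts and the suffix stacking can be checked with a short sketch. The segmentation anti-dis-establish-ment-arian-ism is one common analysis (some would split -arian further), and the only spelling rule handled is dropping the e of -ize before -ation; both are simplifying assumptions.

```python
# One common segmentation (an assumption; analyses of -arian differ).
ANTIDIS = ["anti", "dis", "establish", "ment", "arian", "ism"]

BASE = "floccinaucinihilipilification"
# Suffixes stacked outward: -al, -ize, -ation, -al.
SUFFIXES = ["al", "ize", "ation", "al"]


def stack_suffixes(base: str, suffixes: list[str]) -> list[str]:
    """Attach suffixes one at a time, returning every intermediate form."""
    forms = [base]
    for suffix in suffixes:
        stem = forms[-1]
        if stem.endswith("ize") and suffix.startswith("a"):
            stem = stem[:-1]  # -ize + -ation -> -ization (drop the silent e)
        forms.append(stem + suffix)
    return forms


antidis = "".join(ANTIDIS)
forms = stack_suffixes(BASE, SUFFIXES)
print(antidis, len(antidis))  # antidisestablishmentarianism 28
print(BASE, len(BASE))        # floccinaucinihilipilification 29
for form in forms[1:]:
    print(form, len(form))    # ends with ...ificationalizational 40
```

Nothing stops a longer list of suffixes from being passed in, which is the sense in which there is no longest word.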


III. Meaning.

A. Before you listen to me talk about meaning, I want you to think about it. What does it mean to mean? When I say a word has a particular meaning, what is that? What is the meaning of a word like "bank"?

If we were kids first learning language, we'd have one big problem: how do you know what the words are? We've looked at some physical features that might help with that. Now we turn to the other side of the coin. If you can figure out what the words are, how do you know what they mean?

Quine called it the gavagai problem. A rabbit runs by and someone says "gavagai." Are they saying "rabbit," "furry thing," "running," "things with tails," or something else? You don't really know. But, according to Pinker, kids have some built-in rules for assigning meanings to words. Essentially, they chop the world into individual things, classes of things, and actions. If you combine this with how people categorize, it makes the task easier. Kids can count on adults operating at the basic level (more specific than "some sort of animal," less specific than "a specific breed of rabbit"). So kids can assume it's "rabbit."

The "dax" test is supposed to show this. If you show kids tongs, call them "dax," and ask for another dax, they pick a different kind of tongs. They assume dax is the name of that kind of thing. But if you call a cup dax, and they already know the word "cup," they assume you're picking out a property of cups. If you call a cup dax and then ask for more dax, they pick out items made of the same material, or items with handles.

What is the representation of meaning that kids are forming when they learn words?

B. Meaning can be broken up into four types:

1. Referential: What the word refers to, the concrete object in the world. So, if I say "The president is a clown," the referential meaning of "the president" is George Bush (or whoever is president).

2. Denotative: The generic concept that underlies the word. Your president concept has definitional stuff (leader of the country), as well as a lot of other information (can veto legislation, four-year term, etc.). All of this is part of the denotative meaning. How is this organized? One proposal is by use of feature lists. I'll illustrate with the concepts of man and woman:

| Feature | Man | Woman | (Boy) |
|---|---|---|---|
| Living | + | + | + |
| Animal | + | + | + |
| Mammal | + | + | + |
| Human | + | + | + |
| Female | - | + | - |

The features are any property that the concept has. You just list all that you can think of (or all you need) to uniquely classify everything in your lexicon. This can be pretty tough. Some problems:

a. What happens when you add something like boy to the list of concepts? Without a new feature, it looks just like man. Do you have to add a new feature for every new concept? If so, then why use features at all (if you have as many features as concepts, you might as well just store the concepts as a list)? What would information theory suggest about this problem?

b. How do I rate the importance of the features? A feature that is relevant here (like female) is much less important when I'm classifying trees or furniture. Should it be treated as equally important? What value does female get when I'm putting computer in my list of concepts?
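Problem (a) can be made concrete: using the man/woman/boy feature vectors from the table, a small check finds concepts that no feature distinguishes. This is a sketch; encoding + and - as True and False, and the extra "adult" feature used to separate boy from man, are assumptions for illustration.

```python
# Feature order for the vectors below (True = +, False = -).
FEATURE_NAMES = ("living", "animal", "mammal", "human", "female")

LEXICON = {
    "man":   (True, True, True, True, False),
    "woman": (True, True, True, True, True),
    "boy":   (True, True, True, True, False),
}


def indistinguishable(lexicon: dict[str, tuple[bool, ...]]) -> list[tuple[str, str]]:
    """Return (earlier concept, later concept) pairs with identical feature vectors."""
    first_seen: dict[tuple[bool, ...], str] = {}
    clashes = []
    for concept, vector in lexicon.items():
        if vector in first_seen:
            clashes.append((first_seen[vector], concept))
        else:
            first_seen[vector] = concept
    return clashes


print(indistinguishable(LEXICON))  # [('man', 'boy')]

# Hypothetical fix: add an "adult" feature to every entry.
ADULT = {"man": True, "woman": True, "boy": False}
extended = {concept: vector + (ADULT[concept],) for concept, vector in LEXICON.items()}
print(indistinguishable(extended))  # []
```

Each time a collision like this turns up, the only remedy inside a pure feature list is another feature, which is exactly the worry raised in (a).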

3. Associative meaning: Everything you think of when you hear the word. Just write down all of the related words.

a. Where does it come from? Common expressions (coffee, tea, or milk), experience (we rarely see tables without chairs), antonyms (black-white, good-bad,...), units (ding-dong).

b. How do we measure associative similarity? Count the concepts in common. The more shared associates, the more similar two concepts are.

c. How do you form associations? Two ways:

1) Network: Related concepts are closer together. As you access a concept in your network, nearby concepts come out.

2) Features: Run through the list of features, flipping them one at a time. For man, flipping Female gives you woman.
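Both ideas in this item can be sketched in a few lines: associative similarity as a count of shared associates (b), and producing an associate by flipping one feature (c, way 2). The associate lists are invented toy data, not norms from any study, and the function names are hypothetical.

```python
from typing import Optional

# Toy associate lists (invented for illustration, not real association norms).
ASSOCIATES = {
    "table": {"chair", "wood", "legs", "kitchen", "eat"},
    "chair": {"table", "wood", "legs", "kitchen", "sit"},
    "cloud": {"rain", "sky", "white", "storm"},
}


def shared_associates(a: str, b: str) -> int:
    """Associative similarity: the number of associates two words share."""
    return len(ASSOCIATES[a] & ASSOCIATES[b])


# Binary feature lists, as in the man/woman table.
FEATURES = {
    "man":   {"living": True, "animal": True, "mammal": True, "human": True, "female": False},
    "woman": {"living": True, "animal": True, "mammal": True, "human": True, "female": True},
}


def flip_associate(concept: str, feature: str) -> Optional[str]:
    """Flip one feature and return the stored concept that matches, if any."""
    target = dict(FEATURES[concept])
    target[feature] = not target[feature]
    for name, features in FEATURES.items():
        if features == target:
            return name
    return None


print(shared_associates("table", "chair"))  # 3 (wood, legs, kitchen)
print(shared_associates("table", "cloud"))  # 0
print(flip_associate("man", "female"))      # woman
```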

4. Affective meaning: How the words make you feel. Measured using the semantic differential: a scale with a list of word pairs, where you mark on a line where a particular concept falls. I've illustrated with my own ratings for psycholinguistics:

Concept = psycholinguistics

Angular -*-------- Round

Weak --------*- Strong

Rough --------*- Smooth

Active --*------- Passive

Small --------*- Large

Cold ----*----- Hot

Good -*-------- Bad

Tense ---*------ Relaxed

Wet --------*- Dry

Fresh --*------- Stale

The 10 pairs reduce to three semantic dimensions:

a. Evaluation: Good/bad.

b. Activity: Active/Passive.

c. Potency: Strong/Weak.

With these three dimensions, you can characterize any concept's affective meaning. This is useful for looking at differences between groups (like Americans vs. Iraqis on the concept USA).
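Scoring a semantic differential amounts to reading off where the mark falls and orienting each scale toward its positive pole. This sketch parses three of the ratings for psycholinguistics, one marker scale per dimension. Treating the ten dash positions as equally spaced and rescaling them to run from -1 to +1 are assumptions; published versions of the scale typically use seven points.

```python
# Three of the ten scales from the psycholinguistics ratings, copied verbatim.
RATINGS = [
    "Good -*-------- Bad",
    "Active --*------- Passive",
    "Weak --------*- Strong",
]

# The pole that counts as the positive end of each dimension.
POSITIVE_POLE = {"evaluation": "Good", "activity": "Active", "potency": "Strong"}


def scale_score(line: str) -> tuple[str, str, float]:
    """Return (left pole, right pole, score), where -1 = left end, +1 = right end."""
    left, track, right = line.split()
    position = track.index("*")
    score = 2 * position / (len(track) - 1) - 1
    return left, right, score


def dimension_scores(lines: list[str]) -> dict[str, float]:
    """Score each dimension so that positive values point toward its positive pole."""
    scores = {}
    for line in lines:
        left, right, score = scale_score(line)
        for dimension, pole in POSITIVE_POLE.items():
            if pole == left:
                scores[dimension] = -score  # positive pole is on the left: flip sign
            elif pole == right:
                scores[dimension] = score
    return scores


for dimension, value in dimension_scores(RATINGS).items():
    print(f"{dimension}: {value:+.2f}")
# evaluation: +0.78
# activity: +0.56
# potency: +0.78
```

Comparing two groups would then mean computing these three numbers for each group's ratings of the same concept and looking at the differences.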


IV. Routes to access/Influences on access.

A. Routes to access: One way to determine the organization is to use the tip-of-the-tongue (TOT) phenomenon. If you put a person in a TOT state and then ask what they can tell you about the word, you can find out how the lexicon is organized. Let's try it for a few words.

(Go through the demonstration.)

The general idea is that any method of perceiving that you have at your disposal has to be able to access your stored meanings for concepts:

1. Vision: Reading.

a. Words.

b. Partial words (what's the meaning of a--a--in).

2. Vision: Objects. If you see an object, you can access its meaning. (Q: Does an object have meaning outside of my assignment of meaning to it?)

3. Audition: Speech. The words I'm saying are accessing meaning.

4. Audition: If you hear the sound an object makes, that can access meaning.

5. Touch: Touching an object can tell you its meaning.

6. Smell: The smell an object gives off.

7. Taste.

The point: However we organize the lexicon, it's got to be accessible by at least these methods. Plus, there are probably many more we can think of. So our representation has to be very flexible.

B. Influences on access: Things that can speed it up or slow it down.

1. Frequency: In general, the more frequently you encounter something, the easier it is to access its meaning (true of words, objects, sounds, etc.).

2. Morphology: Morphemes are the smallest units of meaning. Some are whole words, but others are things like plural (-s), past tense (-ed), and so on. The more morphemes a word you're looking up has, the slower access is. It looks like the organization is root word (dog) plus morphological rules (-s = plural; -ed = past...).

3. Syntactic category: Nouns are faster than verbs.

4. Priming: Experience with related concepts will speed access (either a previous word that's related or a sentence context that's related).

5. Ambiguity: If it's present, access is slower. There can be syntactic ambiguity (pardon is both a verb and a noun) and semantic ambiguity (our old pal bank).

The point: Our models have to be affected by these things as well.


V. Lexical organization. Now that we know what meaning is and what affects accessing meaning, we need to think about how it's organized.

A. Network models (Collins and Quillian; later revised by Collins and Loftus). Your concepts are organized in a collection of nodes and links between the nodes. The nodes are organized in a hierarchy:

1. Properties:

a. Distance matters: The farther apart two concepts are, the less related they are.

b. Two kinds of relationships:

1) Superset: The category it's in. So, bird is the superset of canary.

2) Property: Properties it has (has-wings is a property of canary).

c. You can have many kinds of property links (has-, is-, can-, might-...).

d. Cognitive economy: Store things one time, at the highest place they apply. So, since breathes is a property of all animals, store it at the animal node instead of with every animal in the network (part of the Collins and Loftus model is a response to problems with this concept).

2. Evidence: Do the semantic verification task: Verify "a canary is a canary" vs. "a canary is a bird." The more links you have to travel, the longer it takes to verify.

3. Problems:

a. Cognitive economy: Tests of this have failed to support it. Not fatal.

b. Hierarchy: "A horse is an animal" is verified faster than "a horse is a mammal." That's not right if it's a hierarchy, because animal is one link farther from horse than mammal is.

c. Answering no: To say no, the model has to search until it finds that the concepts are far apart (a tree has wings), so no should be slow. But saying no to sentences like this is fastest of all. That really hurts networks.
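A toy version of the hierarchy makes the verification prediction concrete: predicted answer time is taken to grow with the number of superset links climbed. The concepts, properties, and function names are hypothetical; each property is stored only at the highest node it applies to (cognitive economy), and property links themselves are not counted.

```python
# Toy hierarchy (hypothetical): each concept points to its superset.
SUPERSET = {"canary": "bird", "shark": "fish", "bird": "animal", "fish": "animal"}

# Cognitive economy: each property is stored once, at the highest node it applies to.
PROPERTIES = {
    "animal": {"breathes", "has skin"},
    "bird": {"has wings", "can fly"},
    "canary": {"can sing", "is yellow"},
}


def verify_category(concept: str, category: str) -> tuple[bool, int]:
    """Climb superset links; return (answer, links traversed)."""
    node, links = concept, 0
    while node is not None:
        if node == category:
            return True, links
        node = SUPERSET.get(node)
        links += 1
    return False, links


def verify_property(concept: str, prop: str) -> tuple[bool, int]:
    """Climb superset links until some node stores the property."""
    node, links = concept, 0
    while node is not None:
        if prop in PROPERTIES.get(node, set()):
            return True, links
        node = SUPERSET.get(node)
        links += 1
    return False, links


print(verify_category("canary", "canary"))  # (True, 0)
print(verify_category("canary", "bird"))    # (True, 1)
print(verify_category("canary", "animal"))  # (True, 2)
print(verify_property("canary", "breathes"))  # (True, 2)
print(verify_category("canary", "fish"))    # (False, 3)
```

The false sentence needs the longest search of all, which is why fast no responses (problem c) are awkward for this kind of model.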

B. Feature models. Concepts are clusters of semantic features (like above with man and woman).

1. Properties: Words are clusters of features. Two kinds:

a. Distinctive: The defining features. Sort of core features (like wings for birds).

b. Characteristic: Typical features, not required (can fly for birds).

2. Evidence: One thing they're good at is verification of sentences like "a whale is a fish." People are slowed more by this than by "a horse is a fish." The reason is the features in common: whales have more in common with fish than horses do. Network models can't produce this result.

3. Problems: Getting the features is hard (maybe impossible). Separating defining features from characteristic ones is particularly hard. Write down the characteristic features of bachelor. How does the Pope fare with those features? Is the Pope a bachelor? How about a widower? Is he a bachelor?
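For comparison with the network sketch, here is a feature-model sketch: each concept has distinctive (defining) and characteristic features, category membership is decided by the defining features, and the overlap across all features stands in for how hard a false sentence is to reject. The feature sets and function names are invented toy data and hypothetical helpers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Concept:
    distinctive: frozenset[str]                   # defining features
    characteristic: frozenset[str] = frozenset()  # typical, not required

    @property
    def features(self) -> frozenset[str]:
        return self.distinctive | self.characteristic


# Toy feature sets, invented for illustration.
CONCEPTS = {
    "fish": Concept(frozenset({"has gills", "lives in water"}),
                    frozenset({"has fins", "swims", "has scales"})),
    "whale": Concept(frozenset({"mammal", "breathes air"}),
                     frozenset({"lives in water", "has fins", "swims", "is large"})),
    "horse": Concept(frozenset({"mammal", "breathes air"}),
                     frozenset({"has legs", "lives on land", "is large"})),
}


def is_member(instance: str, category: str) -> bool:
    """Yes only if the instance has every defining feature of the category."""
    return CONCEPTS[category].distinctive <= CONCEPTS[instance].features


def overlap(a: str, b: str) -> int:
    """Number of shared features; more overlap predicts a slower 'no'."""
    return len(CONCEPTS[a].features & CONCEPTS[b].features)


for animal in ("whale", "horse"):
    print(f"a {animal} is a fish? {is_member(animal, 'fish')}; shared features: {overlap(animal, 'fish')}")
# a whale is a fish? False; shared features: 3
# a horse is a fish? False; shared features: 0
```

Both sentences are rejected, but the whale sentence shares more features with fish, matching the slower responses described above.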


Psychology of Language Notes 6

Will Langston
