Langston, Cognitive Psychology, Notes 11 -- Language--Structure

I. Goals.

A. Where we are/Themes.

B. Syntax.

C. Meaning.

D. Influences.

II. Where we are/Themes. We've already looked at

some parts of language. When we did our ecological survey, we

considered

visual letter features, orthography (spelling patterns), auditory

features,

and some sound patterns. When we looked at knowledge

representation,

we also looked at word meanings (semantic networks, some basic

propositions).

Now, we're turning our attention to the real comprehension

issues:

How is language understood, and what influences that understanding?

A. We're going to break this into three basic categories:

1. Syntax: Word order information. Sentences are

more than arbitrary strings of words. Some words have to go in

some

places, other words in other places. Getting the order

information

out of the sentence may do a lot for your comprehension.

2. Meaning: Knowing the order is a good start, but meaning

is more than that. We'll look at propositions and mental models

and

other attempts to model the meaning of sentences.

3. Influences: A lot of factors influence the recovery of structure and meaning. We'll look at some of the big ones.

B. As we do this, two things are going to be really important:

1. Grammars: A grammar is a set of elements and the rules

for combining those elements. Grammars can be used to explain

almost

every aspect of language comprehension.

2. Ambiguity: At every level, language inputs are

ambiguous.

Part of what's going to drive our models of language is an approach to

deal with ambiguity. You can use two approaches:

a. Brute force: Try to represent every possibility.

Not good given the limited capacity of working memory.

b. Strategies: Much more reasonable. Use what you

know about language from past experience and apply it to current

problems.

If a particular sentence structure usually has one interpretation, go

with

that as your first try to understand it.


III. Syntax. What's a grammar for word order?

Some possibilities:

A. Word string grammars (finite state grammars): Early

attempts to model sentences treated them as a string of

associations.

If you have the sentence "The boy saw the statue," "the" is the

stimulus

for "boy," "boy" is the stimulus for "saw,"... If a speaker is

processing

language, the initial input is the stimulus for the first word, which,

when spoken, becomes the stimulus for the next word, ...

These ideas get tested with "sentences" of nonsense words. If

you make people memorize "Vrom frug trag wolx pret," and then ask for

associations

(by a type of free association task), you get a pattern like this:

| Cue | Report |
| --- | --- |
| vrom | frug |
| frug | trag |
| trag | wolx... |

It looks like people have a chain of associations. This is

essentially

the behaviorist approach to language.
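Here's a rough sketch of the chain idea in Python. It's only an illustration: the names `START`, `learn_chain`, and `produce` are made up for this example, and it assumes each word cues exactly one next word.

```python
# Sketch of a word-string "grammar": each word is the stimulus for the next.
START = "<start>"  # hypothetical marker for the initial stimulus

def learn_chain(sentence):
    """Store each word as the response to the word just before it."""
    chain = {}
    previous = START
    for word in sentence.lower().split():
        chain[previous] = word
        previous = word
    return chain

def produce(chain):
    """Follow the associations from the initial stimulus until they run out."""
    output = []
    current = START
    for _ in range(len(chain)):  # at most one step per stored association
        if current not in chain:
            break
        current = chain[current]
        output.append(current)
    return output

chain = learn_chain("Vrom frug trag wolx pret")
print(chain["vrom"])   # the associate cued by "vrom"
print(produce(chain))  # the memorized string, rebuilt one link at a time
```

All the chain stores is adjacent pairs. There's no place in it for anything bigger than a word.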

Chomsky had a couple of things to say in response to this:

1. Long distance dependencies are problematic. A long

distance

dependency is when something later in a sentence depends on something

earlier.

For example, verbs agree in number with nouns. If I say "The dogs that walked in the grass pee on trees," I have to hold in mind for five words that plural "dogs" takes the verb form "pee" and not "pees."

Other

forms of this are sentences like "If...then..." and

"Either...or..."

In order to know how to close them, you have to remember how you opened

them.

2. Sentences have an underlying structure that can't be

represented

in a string of words. If you have people memorize a sentence like

"Pale children eat cold bread," you get an entirely different pattern

of

association:

| Cue | Report |
| --- | --- |
| pale | children |
| children | pale |
| eat | cold or bread |
| cold | bread |
| bread | cold |

Why? "Pale children" is not a pair of words. It's a noun

phrase. The two words produce each other as associates because

they're

part of the same thing. To get a representation of a sentence,

you

need to use (at least) a phrase structure grammar. That's our

next

proposal.

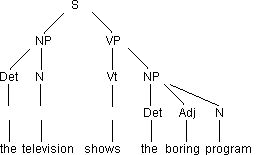

B. Phrase structure grammars (a.k.a. surface structure

grammars):

These generate a structure called a phrase marker when parsing (analyzing the grammatical structure of the sentence). Parsing proceeds in the order that the words occur in the sentence, successively grouping words into larger and larger units (to reflect the hierarchical structure of the sentence). For example:

(1) The television shows the boring program

The phrase marker is a labeled tree diagram that illustrates the

structure

of the sentence. The phrase marker is a result of the parsing.

What's the grammar? It's a series of rewrite rules. You

have a unit on the left that's rewritten into the units on the

right.

For our grammar (what we need to parse the sentence above) the rules

are:

P1: S -> NP + VP

P2: VP -> {Vi or Vt + NP}

P3: NP -> Det (+ Adj) + N

L1: N -> {television, program}

L2: Det -> {the}

L3: Vt -> {shows}

L4: Vi -> {}

L5: Adj -> {boring}

The rules can be used to parse and to generate.
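To make "parse and generate" concrete, here's a small Python sketch of the toy grammar above. The rules and lexicon come straight from the list; the function names (`parse`, `parse_sequence`, `phrase_markers`, `generate`) and the nested-tuple format for the phrase marker are my own assumptions, and the parser is a plain try-every-rule approach, not a claim about how people do it.

```python
from itertools import product

# Rewrite rules P1-P3 and lexical rules L1-L5 from the notes.
RULES = {
    "S": [["NP", "VP"]],
    "VP": [["Vi"], ["Vt", "NP"]],
    "NP": [["Det", "N"], ["Det", "Adj", "N"]],
}
LEXICON = {
    "N": {"television", "program"},
    "Det": {"the"},
    "Vt": {"shows"},
    "Vi": set(),  # no intransitive verbs in this toy lexicon
    "Adj": {"boring"},
}

def parse_sequence(symbols, words, start):
    """Yield (subtrees, next_position) for a sequence of symbols."""
    if not symbols:
        yield [], start
        return
    for tree, middle in parse(symbols[0], words, start):
        for rest, end in parse_sequence(symbols[1:], words, middle):
            yield [tree] + rest, end

def parse(symbol, words, start=0):
    """Yield (phrase_marker, next_position) for each way to rewrite symbol."""
    if symbol in LEXICON:
        if start < len(words) and words[start] in LEXICON[symbol]:
            yield (symbol, words[start]), start + 1
        return
    for expansion in RULES[symbol]:
        for children, end in parse_sequence(expansion, words, start):
            yield (symbol, *children), end

def phrase_markers(sentence):
    """Return every phrase marker that covers the whole sentence."""
    words = sentence.lower().split()
    return [tree for tree, end in parse("S", words) if end == len(words)]

def generate(symbol):
    """Return every word sequence the rules can rewrite symbol into."""
    if symbol in LEXICON:
        return [[word] for word in sorted(LEXICON[symbol])]
    results = []
    for expansion in RULES[symbol]:
        for parts in product(*(generate(s) for s in expansion)):
            results.append([word for part in parts for word in part])
    return results

print(phrase_markers("The television shows the boring program"))
print(len(generate("S")))  # number of sentences this grammar can produce
```

The same `RULES` and `LEXICON` drive both directions: `phrase_markers` runs the rules over a sentence, and `generate` runs them from S down to words.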

C. Transformational grammars: There are some constructions

you can't handle with phrase structure grammars. For example,

consider

the sentences below:

(2) John phoned up the woman.

(3) John phoned the woman up.

Both sentences have the same verb ("phone-up"). But, the "up"

is not always adjacent to the "phone." This phenomenon is called

particle movement. You can't parse or generate these sentences

with

a simple phrase-structure grammar. Problems like this were part

of

the motivation for transformational grammar's development.

Chomsky

is also responsible for this insight.

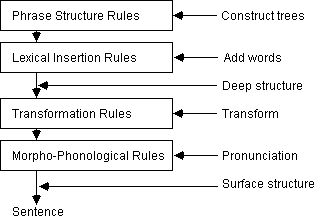

What is transformational grammar? Add some concepts to our

phrase-structure

grammar (both technical and philosophical):

1. The notion of a deep structure: Sentences have two

levels

of analysis: Surface structure and deep structure. The

surface

structure is the sentence that's produced. The deep structure is

an intermediate stage in the production of a sentence. It's got

the

words and a basic grammar.

2. Transformations: You get from the deep structure to

the surface structure by passing it through a set of transformations

(hence

the name transformational grammar). These transformations allow

you

to map a deep structure onto many possible surface structures.

3. Expand the left side of the rewrite rules:

Transformation

rules can have more than one element on the left side. This is a

technical point, but without it you couldn't do transformations.

Why do we need to talk about deep structure? It explains two

otherwise difficult problems:

1. Two sentences with the same surface structure can have very

different meanings. Consider:

(4a and 4b) Flying planes can be dangerous.

This can mean either "planes that are flying can be dangerous" or "the

act of flying a plane can be dangerous." If you allow this

surface

structure to be produced by two entirely different deep structures,

it's

no problem.

2. Two sentences with very different surface structures can have

the same meaning. Consider:

(5) Arlene is playing the tuba.

(6) The tuba is being played by Arlene.

These both mean the same thing, but how can a phrase structure grammar

represent that? With a deep structure (and transformations) it's

easy.

Let's get into this with Chomsky's Toy Transformational Grammar.

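1. Outline: When producing a sentence you pass the basic thought through four stages: phrase structure rules, lexical insertion rules, transformation rules, and morpho-phonological rules.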

2. Rules: You have rules at each stage:

a. Phrase structure rules:

P1: S -> NP + VP

P2: NP -> Det + N

P3: VP -> Aux + V (+ NP)

P4: Aux -> C (+ M) (+ have + en) (+ be + ing)

b. Lexical insertion rules:

L1: Det -> {a, an, the}

L2: M -> {could, would, should, can, must, ...}

L3: C -> {ø, -s (singular subject), -past (abstract past marker), -ing (progressive), -en (past participle), ...}

L4: N -> {cookie, boy, ...}

L5: V -> {steal, ...}

c. Transformation rules:

Obligatory

T1: Affix (C) + V -> V + Affix (affix hopping rule)

Optional

T2: NP1 + Aux + V + NP2 -> NP2 + Aux + be + en + V + by + NP1 (active -> passive transformation)

d. Morpho-phonological rules:

M1: steal -> /stil/

M2: be -> /bi/ etc.

3. Some examples:

a. Produce the sentence "The boy steals the cookie."

To see these, check someone's notes. They're too complex to

produce

here.

b. Produce "The cookie is stolen by the boy."

To see these, check someone's notes. They're too complex to

produce

here.
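As a stand-in for the full derivations, here's a rough Python sketch of how T1 and T2 could operate on a flat string of symbols. Treat it as an illustration under assumptions, not the derivation from class: it skips the tree structure entirely, the function names are invented, and the `SPELL_OUT` table uses ordinary spellings in place of the morpho-phonological rules.

```python
AFFIXES = {"-s", "-past", "en", "ing"}
VERBS = {"steal", "be", "have"}

# Assumption: ordinary spellings stand in for the morpho-phonological rules.
SPELL_OUT = {
    ("steal", "-s"): "steals",
    ("be", "-s"): "is",
    ("steal", "en"): "stolen",
}

def passive(np1, aux, verb, np2):
    """T2 (optional): NP1 + Aux + V + NP2 -> NP2 + Aux + be + en + V + by + NP1."""
    return np2 + aux + ["be", "en", verb, "by"] + np1

def affix_hop(tokens):
    """T1 (obligatory): Affix + V -> V + Affix, applied left to right."""
    hopped = []
    i = 0
    while i < len(tokens):
        if tokens[i] in AFFIXES and i + 1 < len(tokens) and tokens[i + 1] in VERBS:
            hopped.append((tokens[i + 1], tokens[i]))  # the verb now carries the affix
            i += 2
        else:
            hopped.append(tokens[i])
            i += 1
    return hopped

def spell_out(tokens):
    """Turn verb + affix pairs into words and join everything into a sentence."""
    words = [SPELL_OUT[t] if isinstance(t, tuple) else t for t in tokens]
    return " ".join(words).capitalize() + "."

def derive(np1, aux, verb, np2, use_passive=False):
    """Go from a deep-structure string to a surface sentence."""
    if use_passive:
        string = passive(np1, aux, verb, np2)
    else:
        string = np1 + aux + [verb] + np2
    return spell_out(affix_hop(string))

boy, cookie = ["the", "boy"], ["the", "cookie"]
print(derive(boy, ["-s"], "steal", cookie))
print(derive(boy, ["-s"], "steal", cookie, use_passive=True))
```

Both calls start from the same deep-structure pieces (the boy, -s, steal, the cookie). The only difference is whether the optional passive transformation applies before affix hopping.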

4. Psychological evidence for transformations. Note before we start that getting this evidence is problematic. That said, here's some data.

a. Early studies rewrote sentences into transformed

versions.

For what follows, the base sentence is "the man was enjoying the

sunshine."

Negative: "The man was not enjoying the sunshine."

Passive: "The sunshine was being enjoyed by the man."

Question: "Was the man enjoying the sunshine?"

Negative + Passive: "The sunshine was not being enjoyed by the

man."

Negative + Question: "Was the man not enjoying the sunshine?"

Passive + Question: "Was the sunshine being enjoyed by the man?"

Negative + Passive + Question: "Was the sunshine not being

enjoyed

by the man?"

I have you read lots of these and measure reading time. The more transformations you have to undo, the longer it should take. That happens. Note the confound, though: most of the transformed versions also have more words, so it's hard to separate the effect of the transformations on reading time from the effect of sentence length.

b. Understanding negatives. I present you with a long list

of statements to verify. Some are positive statements, some are

negative

statements. Untransforming the negative statements should cause

trouble.

D. Wrap-up. There's more to this story. These

grammars

also have things they can't handle. We're stopping now because

you

have a pretty good idea how this works. Let's look at the other

side

of the coin.


IV. Meaning.

A. Syntax models have some problems:

1. Not very elegant. The computations can be pretty

complicated.

2. Overly powerful. Why this set of transformations?

Why not transformations to go from "The girl tied her shoe" to "Shoe by

tied is the girl"? There's no good reason to explain the

particular

set of transformations that people seem to use.

3. They ignore meaning. Chomsky's sentence "They are

cooking

apples" isn't ambiguous in a story about a boy asking a grocer why he's

selling ugly, bruised apples. Sentences always come in a context

that can help you understand them. So, let's yak about

meaning.

See the demonstration for an example of context influencing meaning.

Demonstration: Someone volunteer to read this passage

out

loud.

"Cinderella was sad because she couldn't go to the dance that

night.

There were big tears in her brown dress."

How about this passage?

"The young man turned his back on the rock concert stage and looked

across the resort lake. Tomorrow was the annual fishing contest

and

fishermen would invade the place. Some of the best bass

guitarists

in the world would come to the spot. The usual routine of the

fishing

resort would be disrupted by the festivities."

Was there a problem? What caused it? What did you do to

fix it?

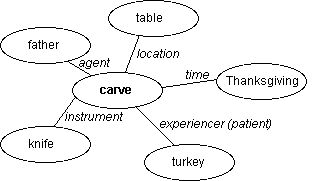

B. Semantic grammars: The PSG and transformational grammars

parse sentences with empty syntactic categories. For example, NP doesn't mean anything; it's just a marker to hold a piece of information.

Semantic grammars are different in spirit. The syntactic

representation

of a sentence is based on the meaning of the sentence. For

example,

consider Fillmore's (1968) case-role grammar. Cases and roles are

the part each element of the sentence plays in conveying the

meaning.

You have roles like agent and patient, and cases like location and

time.

The verb is the organizing unit. Everything else in the sentence

is related to the verb. Consider a parse of:

(7) Father carved the turkey at the Thanksgiving dinner

table with his new carving knife.

The structure is:

The nodes here have meaning. You build these structures as you

read, and the meaning is in the structure. You can do things

purely

syntactic grammars can't. For example, consider:

(8) John strikes me as pompous.

(9) I regard John as pompous.

If you analyze these two syntactically, it's hard to see the

relationship

between the "me" in (8) and the "I" in (9). But, there is a

relationship.

Both are the experiencer of the action. One problem is the number

of cases.
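One way to picture a case-role parse is as a frame organized around the verb. The Python sketch below is an assumption-laden illustration: the dictionary layout and the labels "instrument," "stimulus," and "attribute" are mine (the notes only name agent, patient, location, time, and experiencer), and `REFERENT` is a made-up table saying that "I" and "me" pick out the same person.

```python
# Hypothetical case frames: the verb is the organizing unit, and every other
# element is stored under the role it plays.
carve = {
    "verb": "carve",
    "agent": "Father",
    "patient": "the turkey",
    "location": "the Thanksgiving dinner table",
    "instrument": "his new carving knife",  # assumed label
}
strike = {"verb": "strike", "experiencer": "me",
          "stimulus": "John", "attribute": "pompous"}
regard = {"verb": "regard", "experiencer": "I",
          "stimulus": "John", "attribute": "pompous"}

# Assumption: both pronouns refer to the speaker.
REFERENT = {"I": "speaker", "me": "speaker"}

def role_fillers(frame):
    """Return who or what fills each role, ignoring the verb itself."""
    return {role: REFERENT.get(filler, filler)
            for role, filler in frame.items() if role != "verb"}

print(carve["agent"])
print(role_fillers(strike) == role_fillers(regard))
```

Sentences (8) and (9) come out with the same role fillers even though "me" is an object in one and "I" is a subject in the other.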

C. We can also look at meaning using propositions. I

defined

propositions last time. When we talk about reading and look at

the

Kintsch model, propositions will come into play. I'll hold off

until

then.


V. Influences. What determines how you attach

meaning

to language? The process operates at several levels.

A. Words.

1. Frequency: In general, the more frequently you encounter

something the easier it is to access its meaning (true of words,

objects,

sounds, etc.). To compute frequency we count the number of times

a word appears in a representative sample of written English (or spoken

English). This has a huge impact on speed of understanding.

2. Morphology: Phonemes are groups of sounds that are

treated

as the same sound by a language. Changing a phoneme will change

the

meaning (bit to pit). But, the phoneme has no meaning (/b/ and

/p/

alone don't mean anything). Morphemes are the meaning

units.

These are words, but they're also things like plural (-s), past tense

(-ed),

... The more morphemes you have in a word you're looking up, the

slower it is. It looks like the organization is root word (dog)

plus

morphological rules (-s = plural; -ed = past...).
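The root-plus-rules organization can be sketched like this in Python. It's a toy under stated assumptions: `ROOTS`, `SUFFIX_RULES`, and `decompose` are invented names, the root list is tiny, and it only handles one suffix per word.

```python
# Toy lexicon: store roots once, and handle endings with rules.
ROOTS = {"dog", "cat", "walk"}
SUFFIX_RULES = {"s": "plural", "ed": "past"}

def decompose(word):
    """Split a word into root + morphological rule, if the toy lexicon allows it."""
    if word in ROOTS:
        return [word]
    for suffix, meaning in SUFFIX_RULES.items():
        root = word[: -len(suffix)]
        if word.endswith(suffix) and root in ROOTS:
            return [root, meaning]
    return None  # not analyzable with these roots and rules

print(decompose("dogs"))
print(decompose("walked"))
print(len(decompose("dog")), len(decompose("dogs")))  # morpheme counts
```

More morphemes means more pieces to recover, which fits the idea that multi-morpheme words are slower to look up.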

3. Syntactic category: Nouns are accessed faster than verbs.

4. Priming: Experience with related concepts will speed

access (either a previous word that's related or a sentence context

that's

related). The more context you have to tell you the meaning, the

more help you should have looking it up. This context effect

sometimes

happens and sometimes doesn't.

5. Ambiguity: If it's present, access is slower.

There can be syntactic ambiguity (pardon is a verb and a noun) and

semantic

ambiguity (our old pal bank).

B. Lexical access: Getting at the meaning. Two

possibilities:

1. Search: Automatic process that goes through a list

arranged

by frequency until it gets a match. The process is not affected

by

context. After access happens, you check the recovered meaning

against

the context.

Support: Bimodal listening: Listen to a sentence about

a guy looking for bugs in a conference room before an important secret

meeting. After the sentence (or during) visually present a string

of letters. The person says if the string of letters makes a

word.

The trick: The strings that make words can be related to the

meaning

of "bugs," "SPY" or "ANT." The context biases towards just one

meaning.

But, soon after the word "bugs," both meanings appear to be activated.
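Here's a minimal Python sketch of the search idea, just to show the order of operations: look up first (no context), check against context second. Everything in it is hypothetical, including the frequency counts, the meanings, the `RELATED` table, and the function names.

```python
# Made-up entries: (word, frequency count, meanings). Not real frequency data.
LEXICON = [
    ("javelin", 1, ["throwing spear"]),
    ("bugs", 20, ["insects", "listening devices"]),
    ("the", 60000, ["definite article"]),
]
LEXICON.sort(key=lambda entry: entry[1], reverse=True)  # most frequent first

# Made-up associations used only for the post-access context check.
RELATED = {
    "insects": {"ant", "garden"},
    "listening devices": {"spy", "secret", "meeting"},
}

def search(word):
    """Scan the list in frequency order; return (comparisons made, all meanings)."""
    for comparisons, (entry, _count, meanings) in enumerate(LEXICON, start=1):
        if entry == word:
            return comparisons, meanings
    return len(LEXICON), []

def check_against_context(meanings, context_words):
    """After access, keep only the meanings that fit the context."""
    return [m for m in meanings if RELATED.get(m, set()) & set(context_words)]

comparisons, meanings = search("bugs")
print(comparisons, meanings)  # access returns every meaning, context or not
print(check_against_context(meanings, {"secret", "meeting"}))
```

In this sketch, frequent words are found in fewer comparisons, and both meanings of "bugs" are available until the context check runs.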

2. Direct access: The memory is content-addressable,

meaning

you focus right in on what you're looking for instead of

searching.

This model is influenced by context.

Support: Neighborhood effects. A neighborhood is all of

the orthographically similar words to a target word. For example,

the neighborhood around game is: gave, gape, gate, came, fame,

tame,

lame, same, name, gale. Neighborhoods can be big or small.

The neighborhood around film is small: file, fill, firm. If

neighborhood size affects access, it's evidence for context effects

(kind

of like word superiority). Neighborhood size does affect access.
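To see how a neighborhood can be computed, here's a short Python sketch. It assumes "orthographically similar" means same length with exactly one letter changed, which fits the game and film examples above but is my assumption about the definition. `VOCAB` is a tiny made-up word list.

```python
def is_neighbor(word, other):
    """True if the words have the same length and differ in exactly one letter."""
    if len(word) != len(other):
        return False
    return sum(a != b for a, b in zip(word, other)) == 1

def neighborhood(target, vocabulary):
    """Return the target's neighbors found in the vocabulary, alphabetized."""
    return sorted(w for w in vocabulary if is_neighbor(target, w))

# Tiny illustrative vocabulary, not a real lexicon.
VOCAB = {
    "game", "gave", "gape", "gate", "came", "fame", "tame", "lame",
    "same", "name", "gale", "film", "file", "fill", "firm", "dog",
}

print(neighborhood("game", VOCAB))  # a big neighborhood
print(neighborhood("film", VOCAB))  # a small one
```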

As an aside, gesture also seems to affect lexical access. Frick-Horbury and Guttentag (1998) showed that people whose hands were restricted retrieved fewer words given the definition than people who could move their hands.

So, for example, if I give you this definition "a slender shaft of wood

tipped with iron and thrown for distance in an athletic field event,"

you

should retrieve "javelin." Somehow, holding a rod (which

restricts

hand movements) interferes with retrieval. Think about what we

said

about semantic memory last time. Can those models incorporate

embodiment?

C. Sentences. Most of the action has to do with

ambiguity.

You have to break the sentence up into its components, and then you

have

to link those components into some kind of structure. To do this,

you have some strategies. People have a very limited capacity

working

memory. This means that any processes we propose have to fit in

that

capacity. The problem of trying to do it with limited resources

will

be the driving force behind the strategies. They're ways to

minimize

working memory load. One way to minimize load is to make the

immediacy

assumption: When people encounter ambiguity they make a decision

right away. This can cause problems if the decision is wrong, but

it saves capacity in the meantime. Consider a seemingly

unambiguous

sentence like:

(10) John bought the flower for Susan.

It could be that John's giving it to Susan, but he could also be buying

it for her as a favor. The idea is that you choose one right

away.

Why? Combinatorial explosion. If you have just four

ambiguities

in a sentence with two options each, you're maintaining 16 possible

parses

by the end. Your capacity is 7±2 items, you do the

math.
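If you'd rather let Python do the math, here's a quick sketch. The option labels are placeholders, and `UPPER_SPAN` just encodes the 7+2 upper end of the capacity figure above.

```python
from itertools import product

# Four ambiguities, two options each (placeholder labels).
ambiguities = [("a1", "a2"), ("b1", "b2"), ("c1", "c2"), ("d1", "d2")]

# Brute force: keep every combination of choices alive.
parses = list(product(*ambiguities))

# Immediacy: commit to one option at each ambiguity as you go.
committed = tuple(options[0] for options in ambiguities)

UPPER_SPAN = 7 + 2  # the generous end of 7 plus or minus 2

print(len(parses))               # 2 * 2 * 2 * 2
print(len(parses) > UPPER_SPAN)  # more parses than memory slots
print(committed)                 # a single parse to hold
```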

To see what happens when you have to hold all of the information in a

sentence

in memory during processing, try to read:

(11) The plumber the doctor the nurse met called ate the

cheese.

The problem is you can't decide on a structure until very late in the

sentence, meaning you're holding it all in memory. If I

complicate

the sentence a bit but reduce memory load, it gets more comprehensible:

(12) The plumber that the doctor that the nurse met called

ate the cheese.

Or, make it even longer but reduce memory load even more:

(13) The nurse met the doctor that called the plumber that

ate the cheese.

Now that we've looked at the constraints supplied by working memory

capacity and the immediacy assumption, let's look at the

strategies.

Note: These are strategies. They don't always work, and you won't always use them. But, they're a good solution to the brute force problem.

1. Getting the clauses (NPs, VPs, PPs, etc.).

a. Constituent strategy: When you hit a function word,

start a new constituent. Some examples:

Det: Start NP.

Prep: Start PP.

Aux: Start VP.

b. Content-word strategy: Once you have a constituent

going,

look for content words that fit in that constituent. An example:

Det: Look for Adj or N to follow.
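The first two strategies can be roughed out in Python. This is a sketch under assumptions: the `OPENS` table is a tiny hypothetical list of function words, the output is flat (no nesting of one constituent inside another), and content words join the open constituent without any check on their category.

```python
# Hypothetical function words and the constituent each one starts.
OPENS = {
    "the": "NP", "a": "NP",                 # Det: start NP
    "in": "PP", "on": "PP", "with": "PP",   # Prep: start PP
    "is": "VP", "was": "VP", "can": "VP",   # Aux: start VP
}

def chunk(sentence):
    """Start a constituent at each function word; add other words to the open one."""
    constituents = []
    for word in sentence.lower().split():
        if word in OPENS or not constituents:
            # "?" marks a constituent opened by a word that isn't in OPENS
            constituents.append((OPENS.get(word, "?"), [word]))
        else:
            constituents[-1][1].append(word)
    return constituents

print(chunk("The boy was eating a cookie"))
```

Even this crude version gets a sentence into labeled pieces in a single left-to-right pass, without holding alternatives in memory.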

c. Noun-verb-noun: Overall strategy for the sentence.

First noun is agent, verb is action, second noun is patient.

Apply

this model to all sentences as a first try. Why? It gets a

lot of them correct. So, you might as well make your first guess

something that's usually right. We know people do this because of

garden-path sentences (sentences that lead you down a path to the wrong

interpretation). Example:

(14) The editor authors the newspaper hired liked laughed.

You want authors to be a verb, but when you find out it isn't, you

have

to go back and recompute.

d. Clausal: Make a representation of each clause, then

discard the surface features. For example:

(15) Now that artists are working in oil prints are

rare.

(863 ms)

vs.

(16) Now that artists are working longer hours oil prints

are rare. (794 ms)

In (15), "oil" is not in the last clause; in (16) it is. The access time for "oil" after reading the sentence is in parentheses. When it's not in the current clause, it takes longer, as if you've discarded it.

2. Once you get the clauses, how do you hook them

up? More strategies:

a. Late closure: The basic strategy is to attach new

information

under the current node. Consider the parse for:

(17) Tom said Bill ate the cake yesterday.

(We'll need some new rules in our PSG to pull it off, I'm skipping

those to produce final phrase markers. Again, the markers are too

complex to reproduce here.)

According to late closure, "yesterday" modifies when Bill ate the cake, not when Tom said it (is that how you interpreted the sentence?).

It could modify when Tom said it, but that would require going up a

level

in the tree to the main VP and modifying the decision you made about it

(that it hasn't got an adverb). That's a huge memory burden (once

you've parsed the first part of the sentence, you probably threw out

that

part of the tree to make room). So, late closure eases memory

load

by attaching where you're working without backtracking.

Evidence: Have people read things like:

(18) Since J. always jogs a mile seems like a very short

distance to him.

With eye-tracking equipment, you can see people slow down on "seems"

to rearrange the parse because they initially attach "a mile" to jogs

when

they shouldn't.

b. Minimal attachment: Make a phrase marker with

the fewest nodes. It reduces load by minimizing the size of the

trees

produced. Consider:

(19) Ernie kissed Marcie and Joan...

The markers are too complex to reproduce here.

The minimal attachment tree has 11 nodes vs. 13 for the other

(depending

on how you count). It's also less complex. The idea is that

if you can keep the whole tree in working memory (you don't have to

throw

out parts to make room), then you can parse more efficiently.

Evidence: Consider:

(20) The city council argued the mayor's position

forcefully.

(21) The city council argued the mayor's position was

incorrect.

In (21) minimal attachment encourages you to make the wrong tree (with "the mayor's position" as the direct object of "argued"), and you have to recompute when you hit "was."

3. Ambiguity. What does ambiguity do to the processing

of sentences? Loosely speaking, it makes things harder.

(Note:

In the next unit we'll look at individual differences in dealing with

ambiguity.

This has a large impact on reading skill.) MacKay (1966) asked

participants

to complete sentences. They could be ambiguous, such as:

(22) Although he was continually bothered by the cold...

(cold)

(23) Although Hannibal sent troops over a week ago...

(over)

(24) Knowing that visiting relatives could be bothersome...

(visiting relatives)

The ambiguous part of each sentence is in parentheses. Completing

these ambiguous versions took longer than completing non-ambiguous

counterparts.

Note that ambiguity is really a part of any language understanding

task.

If it weren't for ambiguity, language would be easy to comprehend.


Cognitive Psychology Notes 11

Will Langston
